Spotify with ChatGPT

This project, from last summer, was my first foray into AI development. It's a relatively small and straightforward project, ideal for anyone looking to extend ChatGPT's functionality to other applications. I developed a GPT that can suggest songs and add them to my Spotify playlists.

The goal

The aim of this project was to enable ChatGPT to access my Spotify playlists and utilize generative AI to create new playlists.

The solution

- Integrate new features into ChatGPT using Actions.

- Connect multiple actions to enhance application functionality.

- Convert OAuth2 to Bearer access tokens.

- Overcome robots.txt restrictions.

The process

To integrate GPT with Spotify, I needed a way for ChatGPT and Spotify to communicate. GPT Actions let a GPT call external endpoints described by an OpenAPI schema, but only with OAuth or Bearer tokens. The challenge was that Spotify's Web API requires OAuth2.

To resolve this, I developed middleware at spotify.gptintegrations.app using Laravel. The middleware handles the OAuth2 authentication with Spotify and exposes the API through endpoints that accept a Bearer token.

Instead of calling Spotify's endpoints directly, the GPT calls the proxy middleware, which exposes identical endpoints. The OpenAPI schema uses the same paths but with gptintegrations.app/api/v1 as the base URL, as shown below.

Complete OpenAPI Schema: spotify.gptintegrations.app/schema

openapi: 3.0.3
info:
  title: GPTIntegrations proxy api for Spotify Web API - Full Playlist and Search Management
  description: GPTIntegrations proxy for Spotify api to connect with chatgpt endpoints for searching, creating, updating,
    deleting, and managing playlists, including user authentication.
  version: 1.0.0
servers:
  - url: https://gptintegrations.app/api/v1
paths:
  /search:
    get:
      operationId: searchSpotifyItems
      x-openai-isConsequential: false
      summary: Search for Item
      description: Get Spotify catalog information about albums, artists, playlists, tracks, shows, episodes or audiobooks
        that match a keyword string.
      tags:
        - Search
      security:
        - bearerAuth: []
      parameters:
        - name: q
          in: query
          required: true
          schema:
            type: string
        - name: type
          in: query
          required: true
          schema:
            type: array
            items:
              type: string
              enum:
                - album
                - artist
                - playlist
                - track
                - show
                - episode
                - audiobook
        - name: market
          in: query
          required: false
          schema:
            type: string
        - name: limit
          in: query
          required: false
          schema:
            type: integer
        - name: offset
          in: query
          required: false
          schema:
            type: integer
        - name: include_external
          in: query
          required: false
          schema:
            type: string
            enum:
              - audio
      responses:
        "200":
          description: Search results matching criteria
        "401":
          description: Unauthorized
        "403":
          description: Forbidden
        "429":
          description: Too Many Requests
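The proxying idea can be sketched roughly as follows. The real middleware is a Laravel application, so this JavaScript version is only an illustration, and `tokenStore`, `buildSpotifyRequest`, and the key and token values are hypothetical. It assumes each Bearer key issued by the proxy maps to a Spotify access token that was obtained earlier through the OAuth2 flow, and it shows how a request to /api/v1/search could be rewritten to the same path on Spotify's Web API.

```javascript
// Sketch only: the real middleware is a Laravel app. All names here are hypothetical.
const SPOTIFY_BASE = "https://api.spotify.com/v1";
const PROXY_PREFIX = "/api/v1";

// Hypothetical store mapping the proxy's Bearer keys to Spotify OAuth2 access tokens
// that were obtained earlier through the normal authorization flow.
const tokenStore = new Map([["proxy-key-123", "spotify-access-token-abc"]]);

function buildSpotifyRequest(method, proxyUrl, authorizationHeader) {
  // The GPT action sends "Bearer <key>".
  const match = /^Bearer\s+(.+)$/i.exec(authorizationHeader || "");
  const spotifyToken = match ? tokenStore.get(match[1]) : undefined;
  if (!spotifyToken) {
    return { status: 401, error: "Unauthorized" };
  }

  const incoming = new URL(proxyUrl);
  if (!incoming.pathname.startsWith(PROXY_PREFIX + "/")) {
    return { status: 404, error: "Endpoint not found" };
  }

  // Same path and query string, different base URL and token.
  const path = incoming.pathname.slice(PROXY_PREFIX.length);
  return {
    status: 200,
    method,
    url: SPOTIFY_BASE + path + incoming.search,
    headers: { Authorization: "Bearer " + spotifyToken },
  };
}

const forwarded = buildSpotifyRequest(
  "GET",
  "https://gptintegrations.app/api/v1/search?q=daft+punk&type=track",
  "Bearer proxy-key-123"
);
console.log(forwarded.url);
```

Keeping the paths identical means the schema for the proxy can mirror Spotify's own documentation, with only the server URL changed.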

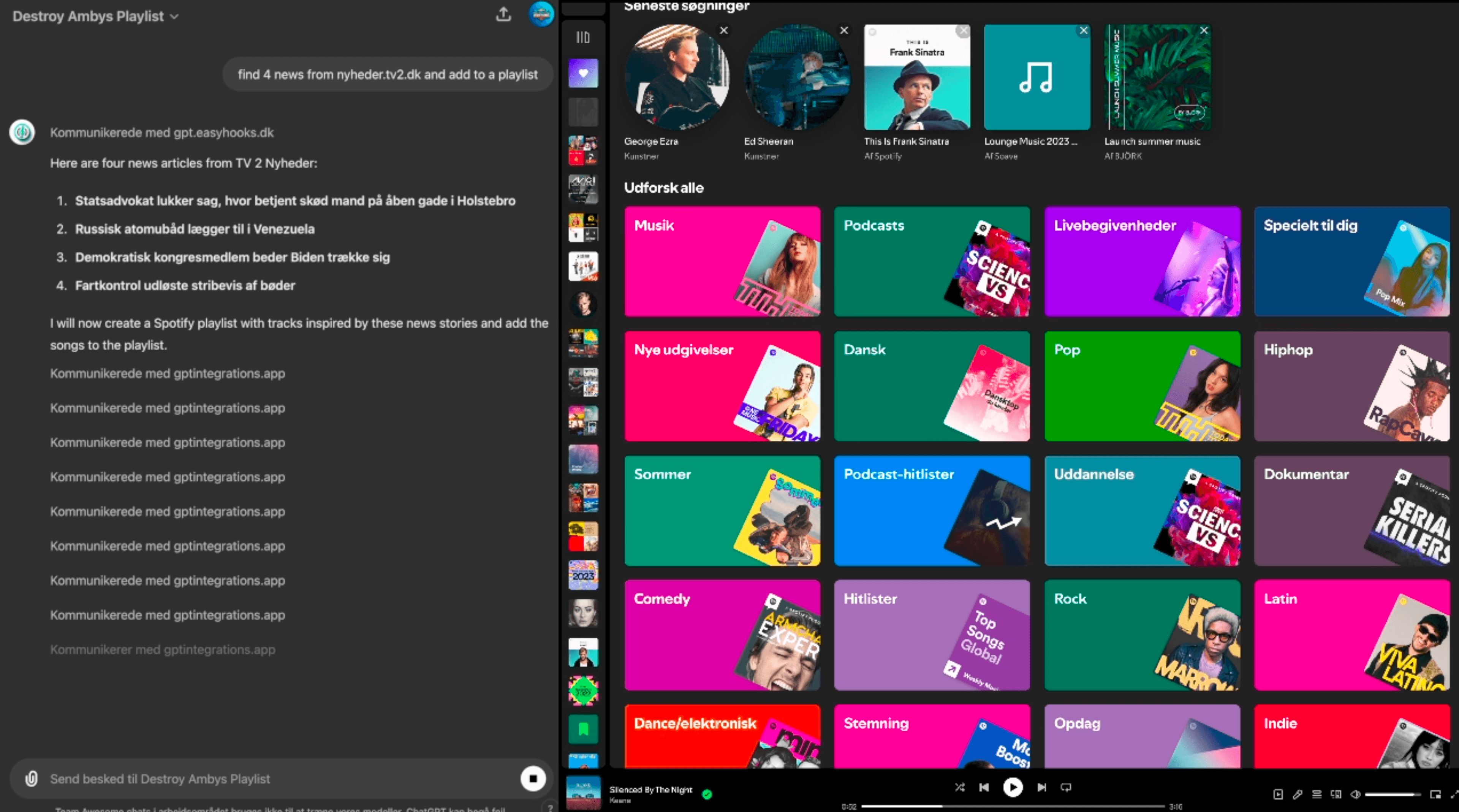

With this setup, I added the action to my GPT, allowing it to build playlists from songs listed on various websites or simply from the model's own suggestions.

In December 2023, several websites introduced robots.txt restrictions that blocked ChatGPT from crawling their sites.

To circumvent this, I created a Cloudflare Worker using Wrangler, plus another OpenAPI schema to connect the GPT to the worker's endpoint.

Since action responses are limited to 400KB, I spent some time tweaking the worker by removing unnecessary tags and lines.

The configuration that worked best was:

var src_default = {
  async fetch(request, env, ctx) {
    const url = new URL(request.url);
    // Only POST /scrape is handled; everything else returns 404.
    if (request.method === "POST" && url.pathname === "/scrape") {
      const requestBody = await request.json();
      let scrapeUrl = requestBody.url;
      if (!scrapeUrl) {
        return new Response("URL parameter is missing", { status: 400 });
      }
      // Default to https:// when the URL has no scheme.
      if (!scrapeUrl.startsWith("http://") && !scrapeUrl.startsWith("https://")) {
        scrapeUrl = "https://" + scrapeUrl;
      }
      try {
        const response = await fetch(scrapeUrl);
        let content = await response.text();
        // Remove whole elements that carry no readable text.
        content = content.replace(/<script[^>]*>([\S\s]*?)<\/script>/gmi, "");
        content = content.replace(/<style[^>]*>([\S\s]*?)<\/style>/gmi, "");
        content = content.replace(/<head[^>]*>([\S\s]*?)<\/head>/gmi, "");
        content = content.replace(/<img[^>]*>/gmi, "");
        content = content.replace(/<link rel="stylesheet"[^>]*>/gmi, "");
        content = content.replace(/<picture[^>]*>([\S\s]*?)<\/picture>/gmi, "");
        content = content.replace(/<nav[^>]*>([\S\s]*?)<\/nav>/gmi, "");
        content = content.replace(/<path[^>]*>([\S\s]*?)<\/path>/gmi, "");
        // Remove attributes that only add bytes.
        content = content.replace(/\sclass="[^"]*"/gmi, "");
        content = content.replace(/\sclass='[^']*'/gmi, "");
        content = content.replace(/\sid="[^"]*"/gmi, "");
        content = content.replace(/\sid='[^']*'/gmi, "");
        content = content.replace(/\sstyle="[^"]*"/gmi, "");
        content = content.replace(/\sstyle='[^']*'/gmi, "");
        content = content.replace(/\sdata-props="[^"]*"/gmi, "");
        content = content.replace(/\sdata-props='[^']*'/gmi, "");
        content = content.replace(/\shref="[^"]*"/gmi, "");
        content = content.replace(/\shref='[^']*'/gmi, "");
        // Remove any remaining bare URLs.
        content = content.replace(/\bhttps?:\/\/\S+/gmi, "");
        return new Response(content, {
          headers: {
            "Content-Type": "text/plain;charset=UTF-8"
          }
        });
      } catch (error) {
        return new Response("Failed to fetch the website content", { status: 500 });
      }
    } else {
      return new Response("Endpoint not found", { status: 404 });
    }
  }
};
export {
  src_default as default
};
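When tuning which tags to strip, it helps to measure the result in bytes, since a size limit applies to the encoded response and not to the character count. The snippet below is a small sketch of that kind of check, not part of the deployed worker. `byteLength`, `stripMarkup`, and the sample HTML are made up for illustration, and `MAX_ACTION_BYTES` assumes that 400KB means 400 * 1024 bytes.

```javascript
// Sketch: measure how much a few of the worker's regexes shrink a page.
// MAX_ACTION_BYTES assumes "400KB" means 400 * 1024 bytes; adjust if the limit is defined differently.
const MAX_ACTION_BYTES = 400 * 1024;

// Size in bytes of the UTF-8 encoded string (not the character count).
function byteLength(text) {
  return new TextEncoder().encode(text).length;
}

// A subset of the replacements used in the worker above.
function stripMarkup(html) {
  return html
    .replace(/<script[^>]*>([\S\s]*?)<\/script>/gi, "")
    .replace(/<style[^>]*>([\S\s]*?)<\/style>/gi, "")
    .replace(/\sclass="[^"]*"/gi, "");
}

const sample =
  '<div class="hero"><script>track();</script><p class="title">Top songs</p></div>';
const stripped = stripMarkup(sample);

console.log(stripped); // <div><p>Top songs</p></div>
console.log(byteLength(sample), "->", byteLength(stripped));
console.log("fits:", byteLength(stripped) <= MAX_ACTION_BYTES);
```

Running a check like this against a few real pages shows which replacements save the most space and which can be dropped.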

With the scraper operational, I could add a line to the GPT's instructions: “If you get denied by robots.txt, then try the URL through the scraper.”
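For the GPT to reach the scraper, the second action needs its own schema. The post does not include that schema, so the following is only a guess at a minimal version that matches the worker's POST /scrape endpoint. It is written as a JavaScript object and serialized to JSON, which OpenAPI allows alongside YAML. The server URL, `operationId`, title, and summary are placeholders, not the real values.

```javascript
// Hypothetical minimal schema for the scraper action; the post does not show the real one.
// The server URL, operationId, title, and summary are placeholders.
const scraperSchema = {
  openapi: "3.0.3",
  info: { title: "Scraper worker", version: "1.0.0" },
  servers: [{ url: "https://scraper.example.workers.dev" }],
  paths: {
    "/scrape": {
      post: {
        operationId: "scrapeUrl",
        "x-openai-isConsequential": false,
        summary: "Fetch a page and return its stripped content",
        requestBody: {
          required: true,
          content: {
            "application/json": {
              schema: {
                type: "object",
                required: ["url"],
                properties: { url: { type: "string" } },
              },
            },
          },
        },
        responses: {
          // Status codes and messages mirror what the worker returns.
          "200": {
            description: "Stripped page content",
            content: { "text/plain": { schema: { type: "string" } } },
          },
          "400": { description: "URL parameter is missing" },
          "500": { description: "Failed to fetch the website content" },
        },
      },
    },
  },
};

console.log(JSON.stringify(scraperSchema, null, 2));
```

The request body carries a single `url` field because that is the only property the worker reads from the JSON it receives.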

The final touch was creating an OpenAPI schema for another middleware I developed years ago, updatebot.dk, which converts messages for different software. I configured it to send a notification to a Discord channel when a song or playlist is added.

Conclusion

This project successfully demonstrated the integration of ChatGPT with Spotify, allowing for automated playlist creation and management. The solution involved creating middleware to handle OAuth2 authentication, setting up a proxy to bypass robots.txt restrictions, and fine-tuning the system to ensure seamless operation. These steps resulted in a functional and innovative application that leverages AI to enhance the Spotify experience.

Thank you for taking the time to read about this project. Stay tuned for more exciting blogs where I continue to push the boundaries of AI capabilities!